What PIAAC Measures

PIAAC is designed to measure adult skills across a wide range of abilities, from basic reading and numerical calculations to complex problem solving. To achieve this, PIAAC assesses literacy, numeracy, and problem solving. The tasks developed for each domain are authentic, culturally appropriate, and drawn from real-life situations expected to be important or relevant in different contexts. The content and questions within these tasks reflect the purposes of adults’ daily lives across different cultures, as well as the changing nature of complex digital environments, even if they are not familiar to every adult in every country.

In 2023 (Cycle 2), PIAAC continues to assess adults' skills in literacy, including reading components, and numeracy. This approach allows for the measurement of trends and changes in adult skills between the PIAAC 2012/2014 and 2017 (Cycle 1) administrations and PIAAC 2023 (Cycle 2).

Since the first cycle, the digital landscape has evolved significantly, impacting both adults' personal and professional lives. In response to these changes, the literacy and numeracy frameworks were updated with innovative elements that mirror tasks found in digital settings. For example, in literacy, certain tasks now require consulting multiple information sources, including both static and dynamic texts. Similarly, in numeracy, some tasks involve dynamic applications that utilize interactive, digitally based tools.

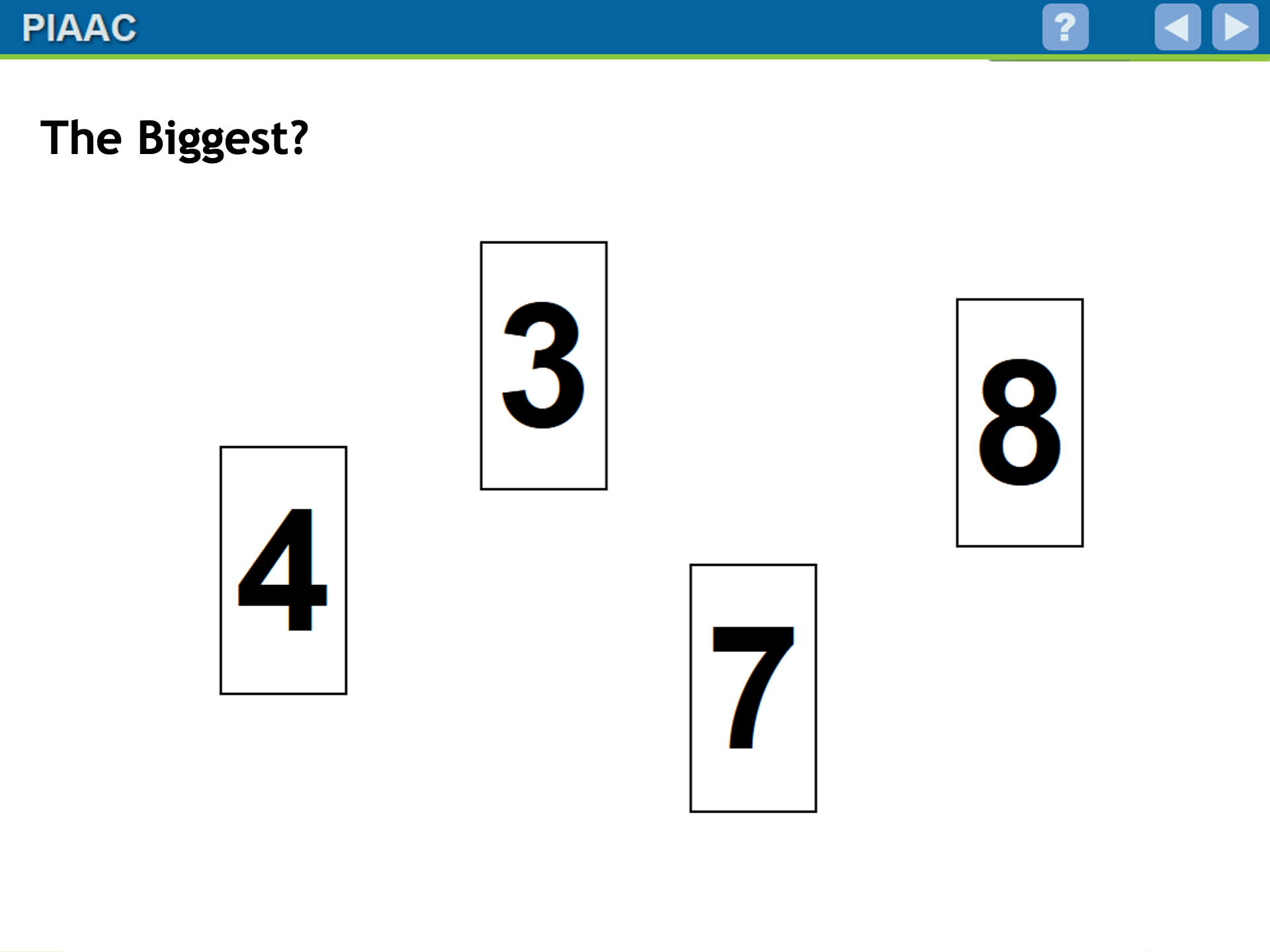

In addition, the PIAAC 2023 assessment introduced a new numeracy component to obtain information on adults with low numeracy skills and replaced digital problem solving with a new domain: adaptive problem solving.

While literacy and numeracy results can be compared across the two PIAAC cycles, results for digital problem solving (Cycle 1) and adaptive problem solving (Cycle 2) cannot be compared because the two domains differ in their assessment frameworks and in the skills they measure.

Another major difference between the two cycles is the mode of assessment. In PIAAC Cycle 1, literacy and numeracy were assessed in both a paper-and-pencil format and on computers, while digital problem-solving items were computer-administered and reading components were limited to paper-and-pencil. In Cycle 2, all respondents completed the entire assessment on a tablet (for more details, see How PIAAC is Administered).


The PIAAC literacy conceptual framework was developed by panels of experts to guide the development and selection of literacy items and to inform the interpretation of results. It defines the underlying skills that the assessment aims to measure and describes how best to assess skills in the literacy domain. The literacy framework provides an overview of

- how the literacy domain is defined and how this definition differs from that used in PIAAC Cycle 1 and previous international assessments, including the International Adult Literacy Survey (IALS) and the Adult Literacy and Lifeskills Survey (ALL)¹;

- the range and proficiency levels of literacy skills being measured within the adult population, from very low to very high;

- the conceptual basis guiding the development of the PIAAC literacy tasks, including the types of items to be used;

- the content areas, contexts, and text types assessed, from personal narratives to descriptions and arguments, as well as situations and scenarios in adults’ lives that require literacy.

The conceptual framework for the literacy domain remains largely unchanged from PIAAC 2012/2014 and 2017 (Cycle 1) in order to ensure comparability with PIAAC Cycle 1 and earlier adult literacy assessments. However, the PIAAC 2023 (Cycle 2) literacy experts updated the framework to reflect the growing importance of reading in digital environments, including various text types (such as digital texts and materials) that impose different cognitive demands and pose different challenges for readers. Specifically, the new framework highlights the growing need for readers to engage effectively with the diverse range of texts they encounter online.

It is important to note that the assessment tasks and materials in PIAAC are designed to measure a broad set of foundational skills needed to successfully interact with the range of real-life tasks and materials that adults encounter in everyday life. Completing these tasks does not require specialized knowledge or skills; in this sense, the skills assessed in PIAAC can be considered “foundational” or, more appropriately, “general” skills required in a very broad range of situations and domains.

¹ IALS and ALL definition: Literacy is using printed and written information to function in society to achieve one's goals and to develop one's knowledge and potential.

In PIAAC Cycle 2, literacy is defined as:

"Literacy is accessing, understanding, evaluating and reflecting on written texts in order to achieve one's goals, to develop one's knowledge and potential and to participate in society."

As described in the PIAAC Cycle 2 literacy framework, the word literacy is taken in its broadest but also most literal sense, to describe the proficient use of written language artifacts such as texts and documents, regardless of the type of activity or interest considered. This characterization of literacy highlights both the universality of written language (i.e., its potential to serve an infinite number of purposes in an infinite number of domains) and the very high specificity of the core ability underlying all literate activities, that is, the ability to read written language.

Some key terms within this definition are explained below.

"accessing…"

Proficient readers can do more than comprehend the texts in front of them. They can also seek out texts that are relevant to their purposes and search for passages of interest within those texts (McCrudden and Schraw, 2007; Rouet and Britt, 2011).

"understanding…"

Most definitions of literacy acknowledge that the primary goal of reading is for the reader to make sense of the contents of the text. This can range from comprehending the meaning of individual words to comprehending the dispute between two authors making opposing claims on a social-scientific issue.

"evaluating and reflecting…"

Readers need to assess whether the text is appropriate for the task at hand, determining whether it will provide the information they need. Readers also make judgments about the accuracy and reliability of both the content and the source of the message (Bråten, Strømsø and Britt, 2009; Richter, 2015).

"on written texts…"

In the context of PIAAC Cycle 2, the phrase "written text" designates pieces of discourse primarily based on written language. Written texts may include non-verbal elements such as charts or illustrations.

"in order to achieve one’s goals,"

Just as written languages were created to meet the needs of emergent civilizations, literacy, at the individual level, is primarily a means of achieving one's goals. Goals relate to personal activities but also to the workplace and to interaction with others.

"to develop one’s knowledge and potential and to participate in society."

Developing one's knowledge and potential highlights one of the most powerful consequences of being literate. Written texts may enable people to learn about topics of interest, but also to become skilled at doing things and to understand the rules of engagement with others.

For the complete PIAAC literacy framework, see:

In PIAAC, results are reported as averages on a 500-point scale or as proficiency levels. Proficiency refers to competence that involves “mastery” of a set of abilities along a continuum that ranges from simple to complex information-processing tasks.

This continuum has been divided into six levels of proficiency. By assigning scale values to both individual participants and assessment tasks, it is possible to see how well adults with varying literacy proficiency performed on tasks of varying difficulty. Although individuals with low proficiency tend to perform well on tasks with difficulty values at or below their level of proficiency, they are less likely to succeed on tasks with higher difficulty values. This means that the more difficult a task is relative to an individual’s level of proficiency, the lower the likelihood that the individual will respond correctly. Similarly, the higher an individual’s proficiency on the literacy scale relative to the difficulty of a task, the more likely that individual is to perform well on it.
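Item response theory (IRT) models formalize this relationship between proficiency and task difficulty. The sketch below is illustrative only: it assumes a two-parameter logistic (2PL) response function expressed directly on the reporting scale, and the slope `a = 0.03` and the function name `p_correct` are hypothetical, not actual PIAAC item parameters or tools. Here `b` is the conventional IRT difficulty parameter, the point where the probability of success is exactly 0.5; the item locations PIAAC reports use a 67 percent criterion instead, as the note under the proficiency table explains.

```python
import math


def p_correct(theta: float, b: float, a: float = 0.03) -> float:
    """Probability of a correct response under an assumed 2PL model.

    theta: respondent proficiency in scale points
    b:     item difficulty parameter (where the probability is exactly 0.5)
    a:     slope per scale point; 0.03 is a hypothetical value
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


# Hypothetical respondent at 275 points facing items of increasing difficulty:
# the probability of success falls as b moves above theta.
for b in (200, 250, 275, 300, 350):
    print(f"difficulty {b}: P(correct) = {p_correct(275, b):.2f}")
```

Under this assumed model, the probability depends only on the gap between proficiency and difficulty, so a respondent 50 points above an item's difficulty is as likely to succeed as another respondent is to fail an item 50 points above their own proficiency.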

The following descriptions summarize the types of tasks that adults at a particular proficiency level can reliably complete.

Description of PIAAC literacy discrete proficiency levels

| Proficiency level and score range | Task descriptions |

|---|---|

| Below Level 1 0–175 points

|

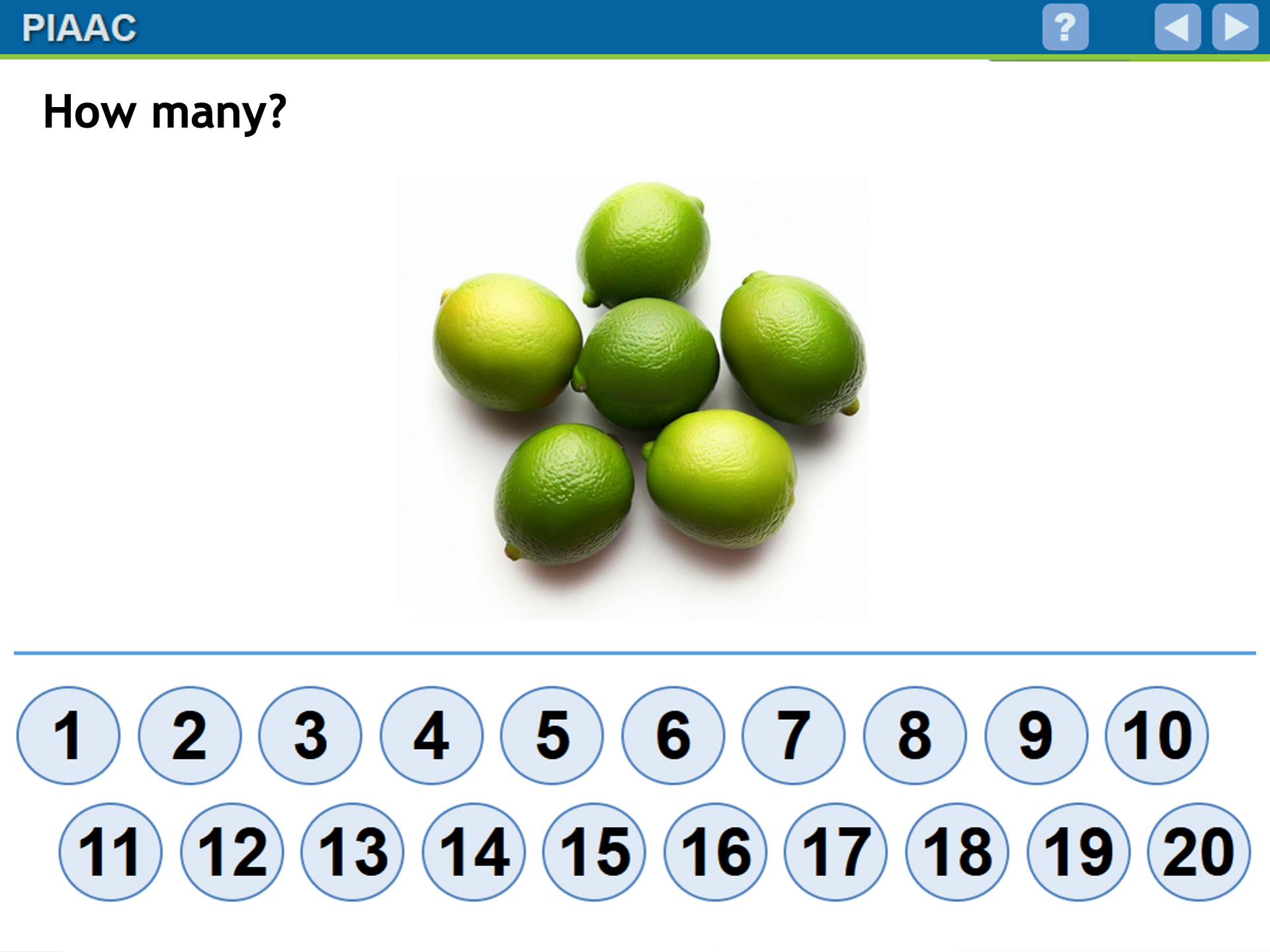

Most adults at Below Level 1 are able to process meaning at the sentence level. Given a series of sentences that increase in complexity, they can tell if a sentence does or does not make sense either in terms of plausibility in the real world (i.e., sentences describing events that can vs. cannot happen), or in terms of the internal logic of the sentence (i.e., sentences that are meaningful vs. not). Most adults at this level are also able to read short, simple paragraphs and, at certain points in text, tell which word among two makes the sentence meaningful and consistent with the rest of the passage. Finally, they can access single words or numbers in very short texts in order to answer simple and explicit questions. The texts at Below Level 1 are very short and include no or just a few familiar structuring devices such as titles or paragraph headers. They do not include any distracting information nor navigation devices specific to digital texts (e.g., menus, links or tabs). Tasks Below Level 1 are simple and very explicit regarding what to do and how to do it. These tasks only require understanding at the sentence level or across two simple adjacent sentences. When the text involves more than one sentence, the task merely requires dealing with target information in the form of a single word or phrase. |

| Level 1 176–225 points

|

Adults at level 1 are able to locate information on a text page, find a relevant link from a website, and identify relevant text among multiple options when the relevant information is explicitly cued. They can understand the meaning of short texts, as well as the organization of lists or multiple sections within a single page. The texts at level 1 may be continuous, noncontinuous, or mixed and pertain to printed or digital environments. They typically include a single page with up to a few hundred words and little or no distracting information. Noncontinuous texts may have a list structure (such as a web search engine results page) or include a small number of independent sections, possibly with pictorial illustrations or simple diagrams. Tasks at Level 1 involve simple questions providing some guidance as to what needs to be done and a single processing step. There is a direct, fairly obvious match between the question and target information in the text, although some tasks may require the examination of more than one piece of information. |

| Level 2 226–275 points

|

At Level 2, adults are able to access and understand information in longer texts with some distracting information. They can navigate within simple multi-page digital texts to access and identify target information from various parts of the text. They can understand by paraphrasing or making inferences, based on single or adjacent pieces of information. Adults at Level 2 can consider more than one criterion or constraint in selecting or generating a response. The texts at this level can include multiple paragraphs distributed over one long or a few short pages, including simple websites. Noncontinuous texts may feature a two-dimension table or a simple flow diagram. Access to target information may require the use of signaling or navigation devices typical of longer print or digital texts. The texts may include some distracting information. Tasks and texts at this level sometimes deal with specific, possibly unfamiliar situations. Tasks require respondents to perform indirect matches between the text and content information, sometimes based on lengthy instructions. Some tasks statements provide little guidance regarding how to perform the task. Task achievement often requires the test taker to either reason about one piece of information or to gather information across multiple processing cycles. |

| Level 3 276–325 points

|

Adults at Level 3 are able to construct meaning across larger chunks of text or perform multi-step operations in order to identify and formulate responses. They can identify, interpret or evaluate one or more pieces of information, often employing varying levels of inferencing. They can combine various processes (accessing, understanding and evaluating) if required by the task . Adults at this level can compare and evaluate multiple pieces of information from the text(s) based on their relevance or credibility. Texts at this level are often dense or lengthy, including continuous, noncontinuous, mixed. Information may be distributed across multiple pages, sometimes arising from multiple sources that provide discrepant information. Understanding rhetorical structures and text signals becomes more central to successfully completing tasks, especially when dealing with complex digital texts that require navigation. The texts may include specific, possibly unfamiliar vocabulary and argumentative structures. Competing information is often present and sometimes salient, though no more than the target information. Tasks require the respondent to identify, interpret, or evaluate one or more pieces of information, and often require varying levels of inferencing. Tasks at Level 3 also often demand that the respondent disregard irrelevant or inappropriate text content to answer accurately. The most complex tasks at this level include lengthy or complex questions requiring the identification of multiple criteria, without clear guidance regarding what has to be done. |

| Level 4 326–375 points

|

At level 4, adults can read long and dense texts presented on multiple pages in order to complete tasks that involve access, understanding, evaluation and reflection about the text(s) contents and sources across multiple processing cycles. Adults at this level can infer what the task is asking based on complex or implicit statements. Successful task completion often requires the production of knowledge-based inferences. Texts and tasks at Level 4 may deal with abstract and unfamiliar situations. They often feature both lengthy contents and a large amount of distracting information, which is sometimes as prominent as the information required to complete the task. At this level, adults are able to reason based on intrinsically complex questions that share only indirect matches with the text contents, and/or require taking into consideration several pieces of information dispersed throughout the materials. Tasks may require evaluating subtle evidence-claims or persuasive discourse relationships. Conditional information is frequently present in tasks at this level and must be taken into consideration by the respondent. Response modes may involve assessing or sorting complex assertions. |

| Level 5 376–500 points

|

Above level 4, the assessment provides no direct information on what adults can do. This is mostly because feasibility concerns (especially with respect to testing time) precluded the inclusion of highly complex tasks involving complex interrelated goal structures, very long or complex document sets, or advanced access devices such as intact catalogs, deep menu structures or search engines. These tasks, however, form part of the construct of literacy in today's world, and future assessments aiming at a better coverage of the upper end of the proficiency scale may seek to include testing units tapping on literacy skills above Level 4. From the characteristics of the most difficult tasks at Level 4, some suggestions regarding what constitutes proficiency above Level 4 may be offered. Adults above Level 4 may be able to reason about the task itself, setting up reading goals based on complex and implicit requests. They can presumably search for and integrate information across multiple, dense texts containing distracting information in prominent positions. They are able to construct syntheses of similar and contrasting ideas or points of view; or evaluate evidence-based arguments and the reliability of unfamiliar information sources. Tasks above Level 4 may also require the application and evaluation of abstract ideas and relationships. Evaluating reliability of evidentiary sources and selecting not just topically relevant but also trustworthy information may be key to achievement. |

NOTE: Every test item is located at a point on the proficiency scale based on its relative difficulty. The easiest items are those located at a point within the score range below Level 1 (i.e., 175 points or less); the most difficult items are those located at a point at or above the threshold for Level 5 (i.e., 376 points or more). An individual with a proficiency score that matches a test item’s scale score value has a 67 percent chance of successfully completing that test item. This individual will also be able to complete more difficult items (those with higher values on the scale) with a lower probability of success and easier items (those with lower values on the scale) with a greater chance of success.

In general, this means that tasks located at a particular proficiency level can be successfully completed by the “average” person at that level approximately two-thirds of the time. However, individuals scoring at the bottom of the level would successfully complete tasks at that level only about half the time while individuals scoring at the top of the level would successfully complete tasks at the level about 80 percent of the time. Information about the procedures used to set the achievement levels is available in the OECD PIAAC Technical Report.

SOURCE: OECD 2023 Survey of Adult Skills International Report
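The 67 percent rule in the note above determines where an item sits on the scale, and the score ranges in the proficiency table determine which level that point falls in. The following is a minimal sketch of both steps, assuming a two-parameter logistic (2PL) response function with hypothetical parameters `a` (slope) and `b` (difficulty at a 50 percent chance); the function names `rp_location` and `level_for` are illustrative, not part of any PIAAC software. Solving 1 / (1 + exp(-a(θ − b))) = 0.67 gives θ = b + ln(0.67 / 0.33) / a. Treating each range's lower bound (176, 226, 276, 326, 376) as the threshold for non-integer scores is also an assumption.

```python
import bisect
import math

# Lower bounds of Level 1 through Level 5, from the score ranges in the
# literacy proficiency table. Fractional scores below a bound stay in the
# lower level (an assumption for non-integer scores).
CUTS = [176, 226, 276, 326, 376]
LEVELS = ["Below Level 1", "Level 1", "Level 2", "Level 3", "Level 4", "Level 5"]


def level_for(score: float) -> str:
    """Return the proficiency level label for a score on the 0-500 scale."""
    if not 0 <= score <= 500:
        raise ValueError("score must lie on the 0-500 reporting scale")
    # bisect_right counts how many thresholds the score has reached.
    return LEVELS[bisect.bisect_right(CUTS, score)]


def rp_location(b: float, a: float, rp: float = 0.67) -> float:
    """Scale point where an assumed 2PL item is answered correctly with probability rp."""
    return b + math.log(rp / (1.0 - rp)) / a


# Hypothetical item: 2PL difficulty of 280 points and slope of 0.03 per point.
loc = rp_location(280, 0.03)
print(round(loc, 1), level_for(loc))  # prints: 303.6 Level 3
```

In this sketch, the reported location lies above the 50 percent point `b` because a respondent must be more proficient than `b` to reach a two-thirds chance of success; a steeper slope would shrink that gap.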

In Cycle 2, the assessment is delivered on a tablet device.² The assessment interface has been designed to ensure that most, if not all, respondents are able to take the assessment on the tablet even if they have limited experience with such devices.

The PIAAC literacy assessment tasks are organized along a set of dimensions that ensure broad coverage and a precise description of what people can do at each level of proficiency.

The following tables show the distribution of the 80 literacy items selected for the final item set in PIAAC across different dimensions.

Distribution of PIAAC Cycle 2 literacy items by cognitive strategy

| Cognitive strategy | Number | Percent |
|---|---|---|
| Access | 30 | 38% |
| Understand | 35 | 44% |
| Evaluate and reflect | 15 | 19% |
| Total | 80 | 100% |

Source: OECD 2023 PIAAC Reader’s Companion

Distribution of PIAAC Cycle 2 literacy items by text source

| Text source | Number | Percent |
|---|---|---|
| Single | 51 | 64% |
| Multiple | 29 | 36% |
| Total | 80 | 100% |

Source: OECD 2023 PIAAC Reader’s Companion

Distribution of PIAAC Cycle 2 literacy items by text format

| Text format | Number | Percent |
|---|---|---|
| Continuous | 40 | 50% |
| Noncontinuous | 25 | 31% |
| Mixed | 15 | 19% |
| Total | 80 | 100% |

Source: OECD 2023 PIAAC Reader’s Companion

Distribution of PIAAC Cycle 2 literacy items by context

| Context | Number | Percent |
|---|---|---|
| Work | 9 | 11% |
| Personal | 33 | 41% |
| Community | 28 | 35% |
| Education and training | 10 | 13% |
| Total | 80 | 100% |

Source: OECD 2023 PIAAC Reader’s Companion
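Each percentage in the four distribution tables is a category's share of the 80 items, rounded to a whole number. The short sketch below recomputes the shares from the published counts using round-half-up; Python's built-in `round()` rounds halves to even, which would turn 12.5 into 12 rather than the 13 percent shown for Education and training. The helper name `percent_half_up` is illustrative. Because each share is rounded separately, the rounded cognitive strategy shares (38 + 44 + 19) add to 101 percent even though the counts add to exactly 80.

```python
# Item counts copied from the four distribution tables above.
TABLES = {
    "cognitive strategy": {"Access": 30, "Understand": 35, "Evaluate and reflect": 15},
    "text source": {"Single": 51, "Multiple": 29},
    "text format": {"Continuous": 40, "Noncontinuous": 25, "Mixed": 15},
    "context": {"Work": 9, "Personal": 33, "Community": 28, "Education and training": 10},
}


def percent_half_up(count: int, total: int) -> int:
    """Whole-number percentage with .5 rounded upward, using integer arithmetic.

    floor(100 * count / total + 0.5) == (200 * count + total) // (2 * total)
    """
    return (200 * count + total) // (2 * total)


for name, counts in TABLES.items():
    total = sum(counts.values())
    shares = {k: percent_half_up(v, total) for k, v in counts.items()}
    print(f"{name}: total={total}, shares={shares}, sum={sum(shares.values())}")
```

Integer arithmetic is used here so that exact halves such as 12.5 are not affected by floating-point representation.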

² In the first cycle of the study, the assessment could be completed on a laptop computer or in paper-and-pencil format. The computer-based assessment (CBA) format constituted the default format, with the paper-based assessment (PBA) option being made available to those respondents who had little or no familiarity with computers, had poor information and communications technology (ICT) skills, or did not wish to take the assessment on a computer. In the second cycle, all countries administered the assessment on a tablet.

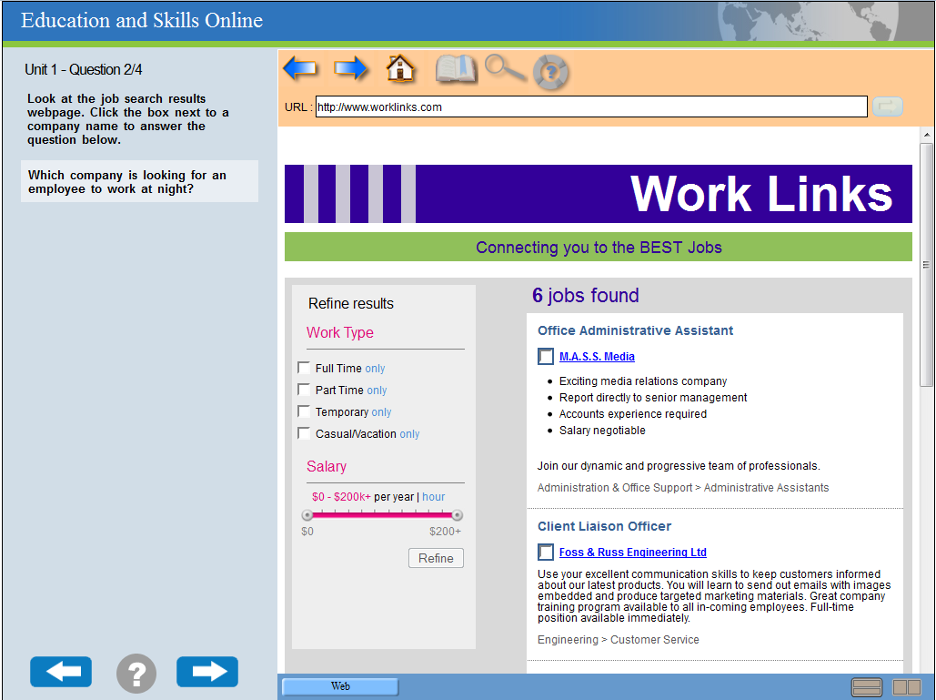

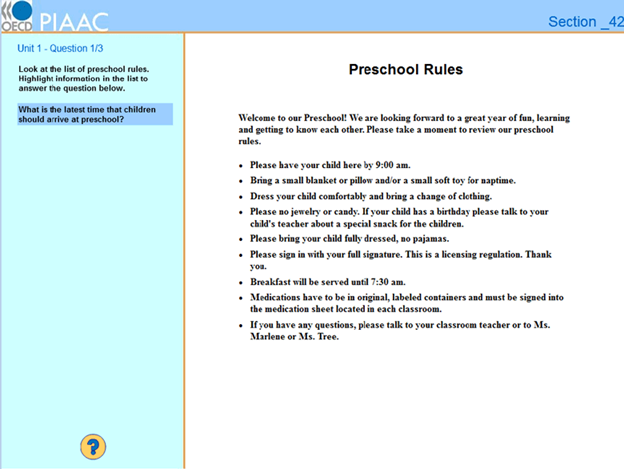

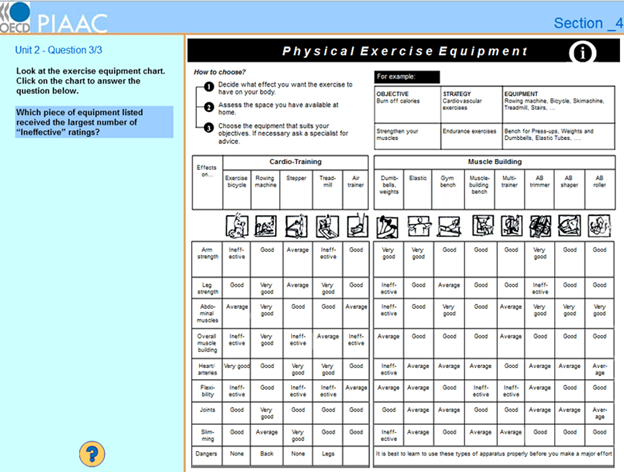

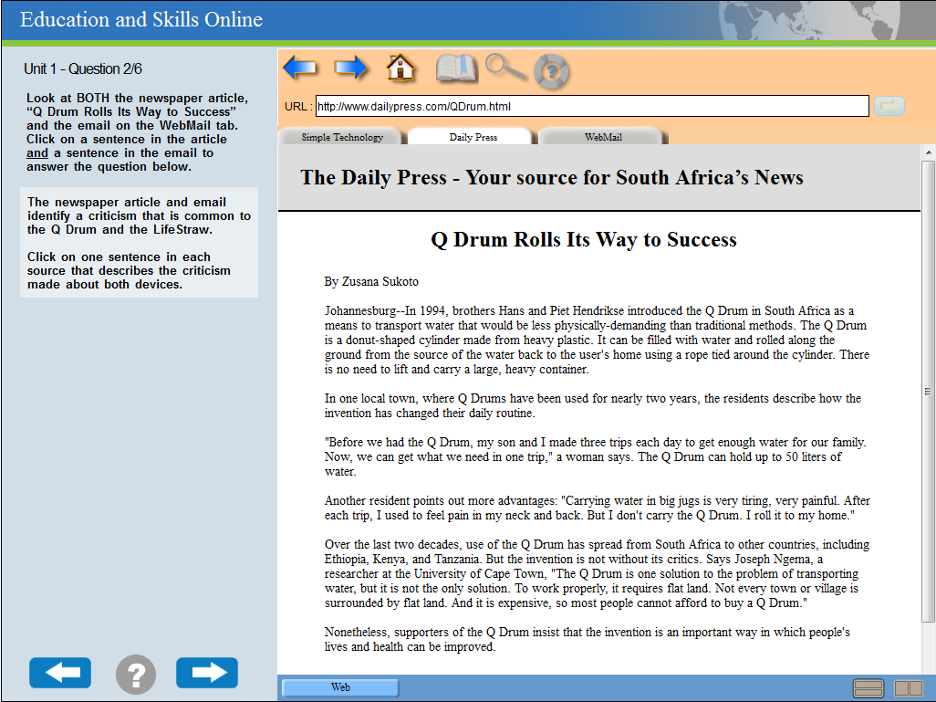

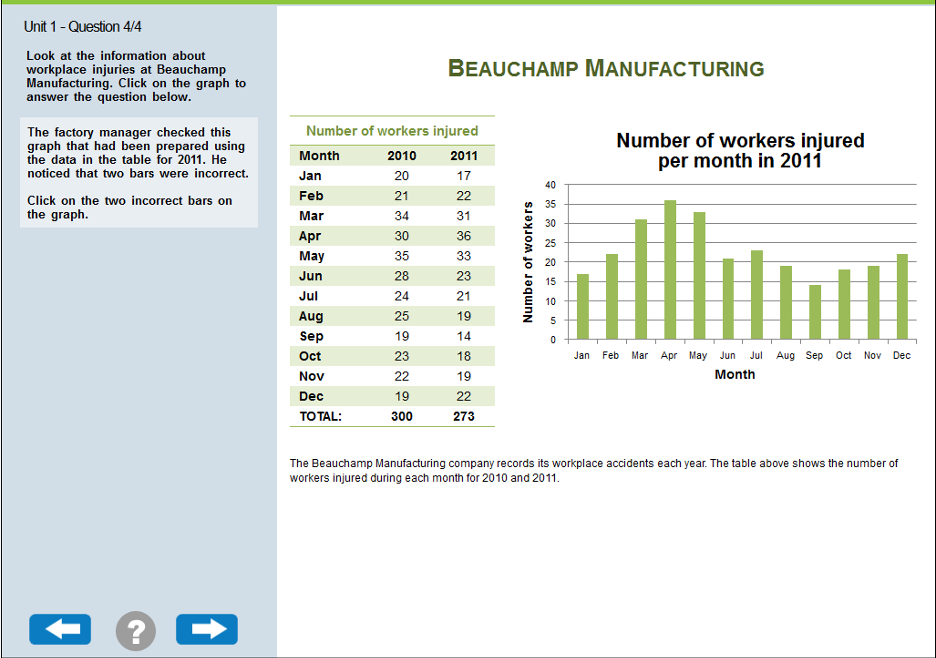

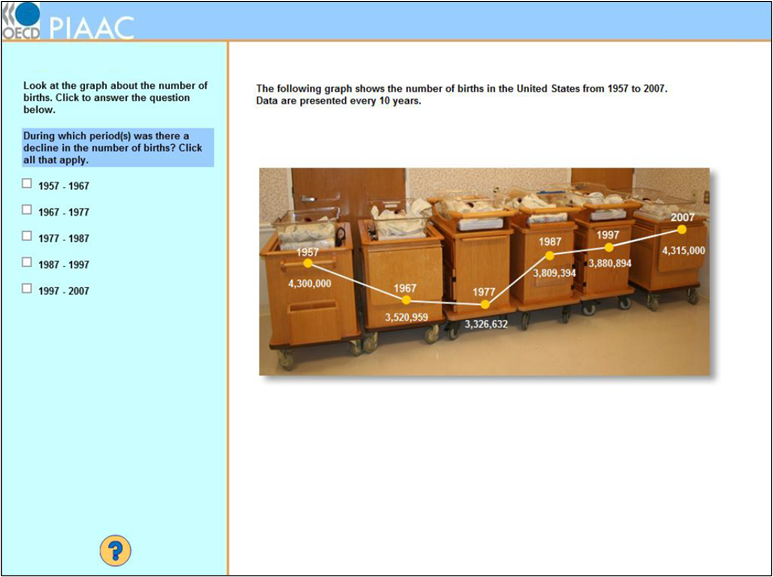

Examples of Cycle 2 literacy items are presented as screenshots of the displays that appear on the tablet used to deliver the assessment. These items were not administered in either the Field Test or the Main Study, so no PIAAC proficiency levels are available for them; estimated difficulty levels are provided instead. To view and interact with the full set of sample items, see the PIAAC released items.

Sample Item 1: Bread and Crackers

This example, the first of three items in this unit, represents a low difficulty level.

SOURCE: OECD (2024). PIAAC Cycle 2 Released Cognitive Items.

Sample Item 2: Bread and Crackers

The second item represents a moderate to high difficulty level.

SOURCE: OECD (2024). PIAAC Cycle 2 Released Cognitive Items.

Sample Item 3: Bread and Crackers

This item represents a moderate to high difficulty level.

SOURCE: OECD (2024). PIAAC Cycle 2 Released Cognitive Items.

Sample Item 4: Preschool Rules

This item represents a low difficulty level.

SOURCE: OECD (2024). PIAAC Cycle 2 Released Cognitive Items.

Sample Item 5: Preschool Rules

This item represents a moderate difficulty level.

SOURCE: OECD (2024). PIAAC Cycle 2 Released Cognitive Items.

References

Bråten, I., Strømsø, H., and Britt, M. (2009). Trust Matters: Examining the Role of Source Evaluation in Students’ Construction of Meaning Within and Across Multiple Texts. Reading Research Quarterly, 44(1), 6-28. http://dx.doi.org/10.1598/rrq.44.1.1

McCrudden, M., and Schraw, G. (2007). Relevance and Goal-Focusing in Text Processing. Educational Psychology Review, 19(2), 113-139. http://dx.doi.org/10.1007/s10648-006-9010-7

Richter, T. (2015). Validation and Comprehension of Text Information: Two Sides of the Same Coin. Discourse Processes, 52(5-6), 337-355. http://dx.doi.org/10.1080/0163853x.2015.1025665

Rouet, J., and Britt, M. (2011). Relevance Processes in Multiple Document Comprehension. In M. McCrudden, J. Magliano, and G. Schraw (Eds.), Text Relevance and Learning from Text. Greenwich, CT: Information Age Publishing.